LLM Foundations (LLM Bootcamp)

In this video, Sergey covers the foundational ideas for large language models: core ML, the Transformer architecture, notable LLMs, and pretraining dataset composition.

Download slides from the bootcamp website here: https://fullstackdeeplearning.com/llm-bootcamp/spring-2023/llm-foundations/

Intro and outro music made with Riffusion: https://github.com/riffusion/riffusion

Watch the rest of the LLM Bootcamp videos here: https://www.youtube.com/playlist?list=PL1T8fO7ArWleyIqOy37OVXsP4hFXymdOZ

00:00 Intro

00:47 Foundations of Machine Learning

12:11 The Transformer Architecture

12:57 Transformer Decoder Overview

14:27 Inputs

15:29 Input Embedding

16:51 Masked Multi-Head Attention

24:26 Positional Encoding

25:32 Skip Connections and Layer Norm

27:05 Feed-forward Layer

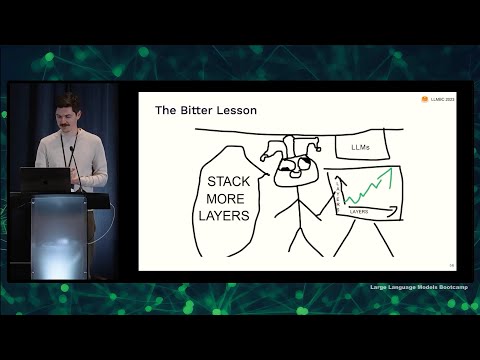

27:43 Transformer Hyperparameters and Why They Work So Well

31:06 Notable LLM: BERT

32:28 Notable LLM: T5

34:29 Notable LLM: GPT

38:18 Notable LLM: Chinchilla and Scaling Laws

40:23 Notable LLM: LLaMA

41:18 Why include code in LLM training data?

42:07 Instruction Tuning

46:34 Notable LLM: RETRO

2023-06-20T11:17:48Z

https://www.youtube.com/embed/MyFrMFab6bo